Understanding Auditory Misperceptions from Synthetic Voices

The Science Behind Machine-Generated Sound Illusions

Our brains have a remarkable ability to process and interpret sound, but this evolutionary advantage can lead to fascinating auditory misperceptions when encountering artificial voices. The human auditory processing system naturally seeks patterns and meaning in ambient sounds, often creating perceived speech where none exists.

How AI Voice Technology Exploits Neural Processing

Modern artificial intelligence voice synthesis leverages our brain’s pattern-recognition tendencies by incorporating key elements of human speech:

- Vocal microtiming and breath patterns

- Natural speech cadence and inflection

- Subvocal resonances and vocal fry

- Prosodic features that mirror human conversation

The Cognitive Disconnect Phenomenon

When exposed to synthetic voices, our limbic system processes these sounds as natural speech, while our conscious mind simultaneously recognizes their artificial nature. This creates a unique perceptual contradiction that illuminates the complexity of human-machine audio interaction.

White Noise and Pattern Recognition

White noise interpretation demonstrates how readily our brains construct meaning from random acoustic signals. This tendency toward auditory pareidolia helps explain why listeners often perceive distinct words or phrases in machine-generated sounds, even when no such linguistic content exists.

Neural Pathways and Sound Processing

The interaction between our deep neural pathways and conscious awareness reveals fundamental aspects of human sound processing:

- Automatic gap-filling in audio streams

- Recognition of speech-like patterns

- Integration of contextual audio cues

- Unconscious sound interpretation mechanisms

This understanding of machine-human audio interaction continues to advance our knowledge of both artificial voice technology and human cognitive processing.

The Science Behind Audio Illusions: Understanding Auditory Perception

Neural Mechanisms of Sound Processing

The fascinating world of auditory illusions parallels the more commonly known optical illusions, operating through complex mechanisms within our brain’s processing centers.

The auditory cortex interprets sound waves and creates meaning from vibrations, often completing incomplete patterns and making assumptions based on stored auditory memories.

Three Primary Types of Audio Illusions

Categorical Perception

Sound processing in the brain automatically sorts ambiguous audio inputs into distinct, recognizable categories. This fundamental mechanism helps organize our auditory environment but can lead to compelling illusions when sounds fall between established categories.
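Categorical sorting of this kind is often illustrated with a continuum between two speech sounds, such as "b" and "p", which differ mainly in voice onset time (VOT). The sketch below is a toy model, not a fitted one: the function names, the 25 ms boundary, and the slope are illustrative assumptions. It shows how a smooth acoustic continuum can collapse into two sharply separated labels.

```python
import math

def prob_voiceless(vot_ms, boundary_ms=25.0, slope=0.5):
    """Toy logistic identification curve: probability of hearing "p".

    boundary_ms and slope are assumed values chosen for illustration.
    """
    return 1.0 / (1.0 + math.exp(-slope * (vot_ms - boundary_ms)))

def categorize(vot_ms, boundary_ms=25.0):
    """Collapse the continuous probability into a discrete category label."""
    return "p" if prob_voiceless(vot_ms, boundary_ms) >= 0.5 else "b"

# Equal 5 ms steps along the continuum produce an abrupt change in label.
labels = [categorize(vot) for vot in range(0, 55, 5)]
```

Sounds near the assumed boundary are the ambiguous cases the paragraph describes: a small acoustic change there flips the perceived category, while an equally large change far from the boundary changes nothing.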

Temporal Processing

The brain’s interpretation of rapid sound sequences can create remarkable perceptual effects. These temporal illusions emerge when multiple audio signals arrive in quick succession, challenging our ability to accurately process timing and sequence.

Contextual Effects

Environmental audio processing significantly influences how we perceive individual sounds. The surrounding acoustic information shapes our interpretation of specific audio elements, demonstrating the brain’s sophisticated pattern-recognition capabilities.

Notable Audio Illusion Phenomena

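The McGurk effect demonstrates the powerful interaction between visual and auditory processing: visual lip movements can dramatically alter sound perception. In the classic demonstration, audio of "ba" paired with video of a speaker mouthing "ga" is often heard as "da".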

Similarly, the Shepard tone illusion creates an impossible acoustic paradox of seemingly endless ascending or descending pitch, revealing the intricate nature of our auditory processing system.

These predictable patterns in sound perception showcase the brain’s remarkable ability to construct coherent auditory experiences from complex sensory inputs, making audio illusions valuable tools for understanding human perception.
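The Shepard tone mentioned above can be sketched as a stack of partials spaced one octave apart under a fixed loudness envelope. The following is a minimal illustration, assuming a raised-cosine envelope over log-frequency; the function name, the 27.5 Hz base, and the eight-octave range are hypothetical choices made for the example.

```python
import math

def shepard_components(step, steps_per_octave=12, n_octaves=8, base_hz=27.5):
    """Return sorted (frequency_hz, amplitude) pairs for one Shepard tone.

    All names and default values here are illustrative assumptions.
    """
    components = []
    for k in range(n_octaves):
        # Position of this partial in octaves above base_hz, wrapped so a
        # partial leaving the top of the range re-enters at the bottom.
        pos = (k + step / steps_per_octave) % n_octaves
        freq = base_hz * 2.0 ** pos
        # Raised-cosine loudness envelope: silent at both edges of the
        # range and loudest in the middle, which hides the wrap-around.
        amp = 0.5 * (1.0 - math.cos(2.0 * math.pi * pos / n_octaves))
        components.append((freq, amp))
    return sorted(components)

# Stepping up one full octave yields exactly the same set of partials.
same_after_octave = shepard_components(0) == shepard_components(12)
```

Because the set of partials repeats after one octave, a sequence of steps can rise indefinitely without the overall spectrum ever getting higher, which is the source of the "endless" pitch impression.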

When Machines Speak Like Humans

The Evolution of Speech Synthesis Technology

Speech synthesis technology has evolved dramatically to replicate human vocal patterns with unprecedented accuracy.

Modern text-to-speech systems now incorporate subtle nuances such as breath patterns, vocal fry, and millisecond-scale pauses that were once exclusive to human speech. These advancements represent a major leap in artificial voice generation.

Key Elements of Synthetic Speech

Prosody and Vocal Mechanics

Neural networks have mastered three critical components of natural speech:

- Prosody: The rhythm and intonation patterns

- Phoneme blending: Seamless sound transitions

- Emotional modulation: Natural variation in tone and affect

Advanced Voice Characteristics

Deep learning models demonstrate remarkable sophistication in replicating individual voice traits, including:

- Regional accents

- Age-related vocal patterns

- Speech variations and impediments

- Natural speech cadence

The Human-Machine Convergence

The advancement of speech synthesis technology has reached a pivotal milestone where synthetic voices frequently pass as human in blind tests.

This breakthrough in speech technology represents significant progress in human-machine interaction, while raising important considerations about voice authentication and digital communication security.

Impact on Communication Technology

- Enhanced user interfaces

- Accessibility solutions

- Voice assistant applications

- Digital content creation

The convergence of artificial and human speech capabilities marks a transformative moment in technological evolution, fundamentally changing how we interact with machines and process vocal communications.

Understanding Our Brain’s Pattern Recognition System

The Neural Foundation of Auditory Pattern Recognition

The human brain possesses remarkable pattern recognition capabilities, particularly in processing and interpreting auditory information.

This sophisticated system operates through evolved neural networks that continuously analyze incoming sound waves, transforming seemingly random acoustic signals into meaningful information.

These networks function similarly to advanced speech recognition systems, but with far greater adaptability and contextual understanding.

Multi-Level Processing in Pattern Recognition

Pattern recognition processing occurs simultaneously across multiple neural levels.

The system begins with basic acoustic processing, where individual phonemes and syllables are identified and categorized.

Higher-level processing then integrates these elements into complex language patterns, matching them against stored linguistic templates in our memory. This hierarchical approach enables rapid and efficient speech comprehension across various contexts.

Pattern Recognition and False Positives

Our brain’s pattern matching system sometimes exhibits heightened sensitivity, leading to false pattern recognition. This phenomenon explains common experiences such as:

- Hearing voices in ambient noise

- Detecting phantom phone notifications

- Perceiving familiar words in foreign languages

- Finding recognizable patterns in random sounds

While these pattern recognition errors might seem disadvantageous, they represent an evolutionary adaptation that enhanced survival by promoting heightened environmental awareness and threat detection.

This overactive pattern matching served as a crucial protective mechanism throughout human development, allowing our ancestors to quickly identify both potential dangers and opportunities in their environment.

Evolutionary Advantages of Pattern Recognition

The brain’s tendency toward active pattern seeking reflects its evolution as a survival mechanism. This system helped early humans:

- Detect predator movements

- Recognize warning calls

- Identify communication signals

- Process environmental cues

These capabilities remain integral to modern human cognition, though they now operate in vastly different contexts.

Digital Voices and Their Impact on Human Emotional Response

Understanding Neural Processing of Synthetic Speech

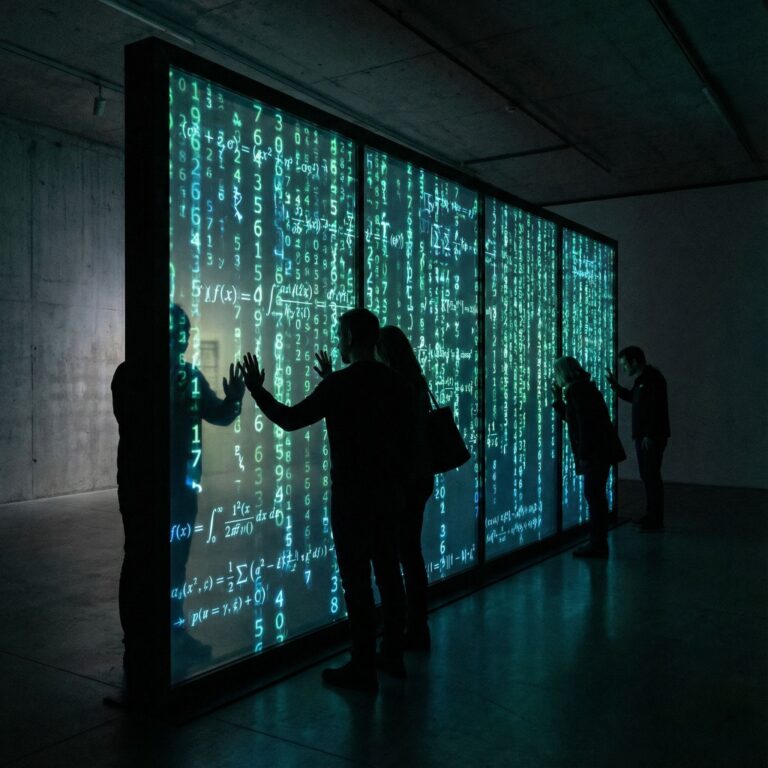

Digital voice technology fundamentally alters how our brains process and respond to auditory communication.

While the conscious mind clearly recognizes synthetic speech patterns, the limbic system processes these artificial voices similarly to natural human speech. This creates a fascinating cognitive paradox where emotional responses occur despite our awareness of the voice’s artificial nature.

The Engineering Behind Emotional Connection

Voice assistant technology like Siri and Alexa employs sophisticated engineering to trigger specific emotional responses.

These systems utilize carefully calibrated combinations of pitch modulation, rhythmic patterns, and tonal variations to establish trust and comfort. The strategic implementation of these elements demonstrates how digital voice design directly influences user engagement and emotional connection.

The Authenticity Paradox in Voice Design

Research reveals that digital voices incorporating subtle imperfections generate stronger emotional engagement than those striving for technical perfection. This phenomenon aligns with human evolutionary psychology, where natural speech contains minor variations and inconsistencies.

The inclusion of carefully crafted vocal imperfections creates a more authentic communication experience, leading to enhanced user trust and emotional resonance.

Neural Response Patterns and Digital Communication

The way our brains process synthetic speech creates a unique duality in our response mechanisms. While higher cognitive functions acknowledge the artificial nature of these voices, deeper neural pathways respond as if engaging with natural human speech.

This cognitive disconnect provides valuable insights into human-machine interaction and the future development of more effective digital voice interfaces.

Understanding Ghost Words in White Noise: Audio Pareidolia Explained

The Science Behind Phantom Audio Patterns

When exposed to white noise, the human brain demonstrates a remarkable tendency to perceive distinct words and phrases that don’t physically exist in the audio signal. This phenomenon, known as audio pareidolia, showcases our neural system’s powerful pattern-recognition capabilities in action.
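To make concrete what "don't physically exist in the audio signal" means, the sketch below generates Gaussian white noise and measures how strongly each sample predicts later ones. It is an illustration under stated assumptions: `white_noise` and `autocorrelation` are hypothetical helper names, and the sample count and seed are arbitrary.

```python
import random

def white_noise(n, seed=0):
    """Generate n samples of Gaussian white noise (seeded for repeatability)."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]

def autocorrelation(samples, lag):
    """Normalized autocorrelation of the samples at the given lag."""
    n = len(samples)
    mean = sum(samples) / n
    var = sum((x - mean) ** 2 for x in samples) / n
    cov = sum(
        (samples[i] - mean) * (samples[i + lag] - mean)
        for i in range(n - lag)
    ) / n
    return cov / var

noise = white_noise(5000)
# Correlation at several nonzero lags; values near zero mean no sample
# carries information about the ones that follow it.
lag_correlations = [autocorrelation(noise, lag) for lag in range(1, 6)]
```

Speech has strong short-range structure, so its autocorrelation at small lags is far from zero. White noise has essentially none, which is why any words a listener hears in it must be supplied by the listener.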

Psychological Factors Influencing Sound Perception

The manifestation of ghost words strongly correlates with listeners’ psychological state and recent experiences. Research demonstrates that individuals primed to expect specific types of messages often report hearing corresponding content – those anticipating religious content frequently detect prayers or biblical phrases, while those experiencing anxiety commonly perceive threatening communications.

The Persistence of Auditory Illusions

What makes audio pareidolia particularly compelling is its resistance to rational explanation. Even when listeners understand they’re hearing random noise, their brains continue constructing meaningful patterns from the chaos.

This mechanism mirrors other perceptual phenomena, such as visual pareidolia in cloud-watching. The occurrence of multiple listeners reporting identical phantom words suggests that shared cultural and linguistic frameworks significantly influence these auditory illusions, revealing how our brain actively constructs meaning rather than passively processing sound input.

Key Characteristics of White Noise Perception

- Pattern recognition in random audio signals

- Cultural influence on perceived content

- Psychological state affecting interpretation

- Persistent illusions despite awareness

- Shared perceptual experiences among listeners

Training AI Systems for Natural Speech Generation

Fundamentals of AI Speech Processing

Modern artificial intelligence speech systems achieve remarkable naturalism through sophisticated machine learning algorithms processing vast datasets of human dialogue.

The development of natural-sounding AI speech requires precise calibration of prosodic elements, including pitch modulation, rhythmic patterns, and stress placement that characterize authentic human communication.

Core Elements of Natural Speech AI

Three fundamental components drive successful AI speech synthesis:

- Phoneme sequencing optimization

- Emotional context detection

- Micro-pause implementation

These systems must master the intricate process of combining sound units while maintaining authentic sentence-level intonation.

Advanced AI speech models analyze emotional indicators within text to dynamically adjust vocal characteristics, creating contextually appropriate output.

Advanced Speech Pattern Recognition

The most sophisticated aspect of natural speech AI development involves replicating authentic human speech patterns.

Algorithmic analysis of extensive conversation databases reveals subtle timing variations essential for organic-sounding output.

Implementation of randomized micro-variations effectively eliminates artificial cadence, while specialized timing calibration systems process thousands of recorded interactions to identify and reproduce the nuanced patterns characteristic of natural human dialogue.
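The idea of randomized micro-variations can be sketched in a few lines. This is a simplified illustration rather than how any particular speech system works: `jitter_pauses` is a hypothetical helper, and the uniform distribution and 10% bound are assumptions chosen for clarity.

```python
import random

def jitter_pauses(pauses_ms, max_fraction=0.1, seed=None):
    """Perturb each pause by a random amount within +/- max_fraction.

    A uniform distribution is an assumption made for this example; a real
    system would presumably use a distribution learned from recordings.
    """
    rng = random.Random(seed)
    return [
        p * (1.0 + rng.uniform(-max_fraction, max_fraction))
        for p in pauses_ms
    ]

# A perfectly regular rhythm (every pause 200 ms) becomes slightly uneven.
regular = [200.0, 200.0, 200.0, 200.0]
varied = jitter_pauses(regular, max_fraction=0.1, seed=42)
```

Each pause stays within 10% of its original length, so the overall pacing is preserved while the mechanical regularity is broken up.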

The Future of Synthetic Voice Technology: A Comprehensive Analysis

Revolutionary Advances in AI-Generated Speech

Recent breakthroughs in synthetic voice technology are revolutionizing human-machine interaction.

AI-powered speech synthesis is reaching unprecedented levels of authenticity, becoming virtually indistinguishable from natural human voices.

This transformation is poised to reshape multiple industries, from digital healthcare solutions to immersive entertainment experiences.

Key Technological Developments

1. Emotional Voice Synthesis

Advanced emotional synthesis represents a crucial breakthrough in voice technology. These systems can now replicate complex emotional states, incorporating subtle variations in tone, pitch, and rhythm. This development enables more natural human-AI interactions and creates opportunities for enhanced user engagement across platforms.

2. Real-Time Voice Cloning

Voice cloning technology has evolved to enable instant replication of voice patterns. This advancement opens new possibilities in personalized content creation and accessibility solutions. However, this capability necessitates robust ethical frameworks and security measures to prevent misuse.

3. Multilingual Processing

Advanced language processing systems now capture intricate linguistic nuances, including regional accents and cultural expressions. This breakthrough enables truly global communication solutions and enhances cross-cultural understanding through technology.

Transforming Accessibility and Communication

The impact of synthetic voice technology on accessibility services is particularly significant. Adaptive voice assistants now offer personalized support for diverse user needs, including:

- Language learning enhancement through accent-perfect pronunciation

- Speech therapy applications with real-time feedback

- Customized voice interfaces for different abilities and preferences

Future Applications and Considerations

The evolution of synthetic voice technology requires careful balance between innovation and responsible implementation. Key considerations include:

- Privacy protection in voice data collection

- Ethical use guidelines for voice replication

- Quality assurance standards for synthetic speech

- Cross-platform integration capabilities

These developments mark a new era in human-machine interaction, promising enhanced communication experiences while maintaining ethical integrity.